Why Voice AI is India’s real OS

One day in 2022, seemingly out of nowhere, my driver couldn’t find my number on his phone. It was strange because he had been with my family for a few years, and he’d always been able to reach me on WhatsApp.

So naturally, I asked him what had happened, because I hadn’t changed my number. What he said revealed more about India than 50+ industry reports ever could have. He said, “Didi apne WhatsApp se DP hata di toh number nahi mil raha.” (You removed your WhatsApp DP, so I couldn’t find your number.) Curious, I dug into this, and this is what he revealed about how he uses WhatsApp:

Recognises the WhatsApp app from its logo > finds the right person by their DP > sends messages as voice notes

This was a eureka moment. A guy with minimal literacy could use his phone, not because he had learnt how to use it, but because he had developed his own hacks around it. Have you ever wondered why delivery drivers and others like them always ask you to send a ‘Hi’ on WhatsApp when they need to start a conversation with you? Your ‘Hi’ pushes your chat to the top of their list, so there is no name to search for or type. Exactly.

Until recently, we couldn't do much with voice at scale. Recorded voice notes took too long to process, generative voice sounded robotic, and the latency made conversations feel unnatural. That has changed dramatically in the past year.

Voice AI models now deliver ~200ms latency, which is roughly the pace of human conversation. LLMs can summarise complex discussions in seconds. Natural barge-in and emotional tone awareness make AI voices feel genuinely alive. Most remarkably, much of Bharat can no longer distinguish between human and AI voices in their native languages. Packy McCormick ran a fun experiment where he got the folks at ElevenLabs to generate his voice. Surprisingly, even Packy’s mom couldn’t tell the difference!

This technical breakthrough unlocks two categories of opportunity for Indian businesses:

#1 Voice data as India's untapped goldmine

Traditional NLP only worked with structured data - "Press 1 for Hindi" kind of interactions. But real Indian conversations are messy: code-switching between languages, interruptions, incomplete thoughts, and cultural context that exists between the words.

LLMs thrive in this chaos. They can process raw and unstructured voice transcripts to extract:

Intent detection ("customer frustrated about delayed delivery")

Entity extraction ("order #5678, Vasant Kunj address")

Outcome summarisation ("unresolved, escalate to Tier 2")
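As a rough sketch of what "structured" means here, the three outputs above can be modelled as one record per conversation. Everything in this example is an assumption for illustration: the `CallInsight` shape, the prompt wording, and the hand-written reply that stands in for a model response. No real LLM API is called; in practice the prompt would go to whichever model you use, and its reply would be parsed the same way.

```python
import json
from dataclasses import dataclass

# Hypothetical record shape: one row of "business intelligence" per conversation.
@dataclass
class CallInsight:
    intent: str      # e.g. "customer frustrated about delayed delivery"
    entities: dict   # e.g. {"order_id": "5678", "address": "Vasant Kunj"}
    outcome: str     # e.g. "unresolved, escalate to Tier 2"

REQUIRED_KEYS = {"intent", "entities", "outcome"}

# Illustrative prompt wording, not a tested or recommended prompt.
PROMPT_HEADER = (
    "Read the call transcript below. It may mix languages and contain "
    "interruptions. Reply with a JSON object that has exactly three keys: "
    "intent, entities, outcome.\n\nTRANSCRIPT:\n"
)

def build_prompt(transcript: str) -> str:
    """Wrap a raw transcript in the extraction instructions."""
    return PROMPT_HEADER + transcript

def parse_insight(raw_reply: str) -> CallInsight:
    """Validate a model's JSON reply and turn it into a CallInsight."""
    data = json.loads(raw_reply)
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return CallInsight(
        intent=str(data["intent"]),
        entities=dict(data["entities"]),
        outcome=str(data["outcome"]),
    )

# Hand-written stand-in for a model reply, using the examples from the text.
sample_reply = json.dumps({
    "intent": "customer frustrated about delayed delivery",
    "entities": {"order_id": "5678", "address": "Vasant Kunj"},
    "outcome": "unresolved, escalate to Tier 2",
})

insight = parse_insight(sample_reply)
print(insight.intent, "|", insight.entities["order_id"], "|", insight.outcome)
```

The validation step matters more than the prompt: a reply that is missing a field is rejected rather than silently stored, which keeps the downstream data clean.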

Our favorite example of this is Storefox.ai, which records in-store conversations at QSRs through strategically placed microphones. Their AI analyses these conversations to flag stockouts, detect missed upselling opportunities, and surface customer demand patterns. Instead of waiting for monthly sales reports, restaurant owners can get leading indicators from customer conversations in real time.

NeoSapien, featured on Shark Tank, has also created a locket that you can wear to passively record every conversation you have throughout the day, capturing the impromptu ones that happen away from a laptop! The idea is to use the recorded conversations to become more emotionally intelligent and intentional in how you talk to people.

This transforms every conversation into structured business intelligence, something traditional NLP could never deliver at this scale or accuracy.

#2 AI has become indistinguishable from humans

The second breakthrough is even more profound: voice AI has crossed the uncanny valley. Modern text-to-speech powered by LLMs now generates voices so human-like that most Indians cannot distinguish between AI and human speakers - especially in regional languages. When technology feels genuinely human, it stops being foreign and begins to feel familiar. For Bharat, this changes everything.

Take BookingJini's approach with hotel owners. These owners follow the same daily ritual: first call to set operations, last call for updates. BookingJini's AI manager sounds human and behaves like the ideal manager they've always wanted to hire but couldn't afford. "What's today's occupancy rate?" gets an instant and accurate response. Commands like "Please book room 712 for Mr. Bhatnagar" can be executed across systems.

Similarly, Kookar transforms household kitchen management through voice-first coordination. Every Indian household with a cook faces daily chaos around meal planning and grocery coordination. Kookar's AI assistant communicates with cooks in local languages, handling everything from menu planning to inventory management to autonomous grocery ordering across platforms. The cook converses naturally about daily needs while AI orchestrates the complex coordination behind the scenes.

This represents the missing piece in India's digital transformation: not forcing people to learn new interfaces, but making intelligent coordination feel as natural as a phone call. The owner converses naturally, the AI coordinates systematically, and the business runs smoothly - all because the technology has become invisible.

The economics of scale

Voice AI follows classic network economics. Initial costs are brutal: streaming ASR, LLM reasoning, and TTS together can cost $0.07–$0.15 per minute. For bootstrapped startups, this math simply does not work. But this changes at scale, because:

Monetisation can improve through cross-selling and workflow automation

Enterprise pricing discounts kick in

Smaller, fine-tuned models reduce operational costs

More data improves accuracy, reducing error-related expenses

Scale itself becomes a competitive moat
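To make the per-minute figure concrete, here is a small back-of-the-envelope calculation. The $0.07–$0.15 range comes from the text above; the call length and monthly volume are made-up inputs chosen only to show the arithmetic, not data from any company.

```python
# Per-minute cost range from the text, kept in whole cents to avoid float drift.
LOW_CENTS_PER_MIN = 7
HIGH_CENTS_PER_MIN = 15

def cost_range_usd(minutes: int) -> tuple[float, float]:
    """Return the (low, high) cost in dollars for a given number of voice minutes."""
    return (minutes * LOW_CENTS_PER_MIN / 100, minutes * HIGH_CENTS_PER_MIN / 100)

# Hypothetical inputs, for illustration only.
AVG_CALL_MINUTES = 3
MONTHLY_MINUTES = 100_000

per_call = cost_range_usd(AVG_CALL_MINUTES)
per_month = cost_range_usd(MONTHLY_MINUTES)

print(f"Per {AVG_CALL_MINUTES}-minute call: ${per_call[0]:.2f} to ${per_call[1]:.2f}")
print(f"Per {MONTHLY_MINUTES:,} minutes: ${per_month[0]:,.0f} to ${per_month[1]:,.0f}")
# Per 3-minute call: $0.21 to $0.45
# Per 100,000 minutes: $7,000 to $15,000
```

Under these assumptions, every call has to earn or save more than roughly 21 to 45 cents before it pays for itself, which is why the levers in the list above matter so much.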

This economic reality means voice AI won't be democratised through a thousand small experiments. It will be built by companies that can reach scale quickly - either through venture funding or by starting with high-value use cases that justify the initial costs. The winners will be those who crack the unit economics first, then expand horizontally across India's vast intermediary economy.

So, what’s the opportunity?

Two of the tools in the GC AI stack that we absolutely love are Granola and Wispr Flow. Granola shows how meetings can be silently captured and summarised, while Wispr Flow uses dictation to power workflows for professionals. Both hint at what Bharat-specific tools could look like in local languages. For Bharat, though, we need voice models trained on regional datasets that deeply understand user behaviour and integrate with it instead of changing it.

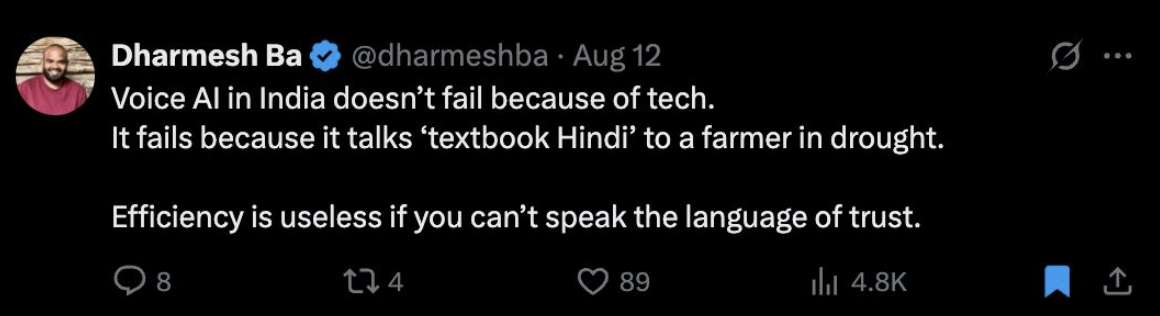

This is where India's structural advantage becomes clear. While global voice AI companies optimise for English-speaking professionals, Indian startups can build for the 90% who think and communicate differently. The driver navigating through WhatsApp DPs, the cook coordinating meal plans, the hotel owner managing operations - they don't need to learn new interfaces. They need technology that speaks their language, literally and culturally.